Hello! We have finally (and sadly) arrived at the last week of this course, so this week we will learn a little about the five most important synthesis modules and their usage: Oscillator, Filter, Amplifier, Envelope, and LFO, following the week 6 lesson of Introduction to Music Production at Coursera.org. Let's start!

Parts of a Synth - Lennar Digital Sylenth 1 Plugin in Cubase

The Oscillator (Voltage Controlled Oscillator - VCO)

The Oscillator is the module that creates the sound. The sound is built from a repeating geometric waveform, so its character depends on the shape of the selected waveform. When a note is played, the signal begins in this module before feeding through the other modules of the synth. The most common waveforms are:

- Sine Wave – Represents a single frequency with no harmonics. It sounds very pure and clear.

- Sawtooth Wave – Contains all harmonics, both even and odd, so the sound is fuller, with a sharp, biting tone.

- Square Wave – Produces a reedy, hollow sound because it contains only odd harmonics (the even harmonics are missing).

- Pulse Wave – Sounds similar to the square wave, but its width (the proportion of each cycle spent high) can be modulated.

- Triangle Wave – Sounds like a filtered square wave: it also contains only odd harmonics, but they roll off much faster.

- Noise – When the vibrations follow no discernible pattern, the waveform is random and the sound is called noise.
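To make the harmonic descriptions above concrete, here is a minimal Python sketch, assuming idealized textbook waveforms: each function maps a phase (the fraction of one cycle completed, from 0 to 1) to a sample between -1 and 1, and `harmonic_magnitudes` lists the relative strength of each harmonic. All names are hypothetical, and real synths such as Sylenth1 use band-limited versions of these shapes to avoid aliasing, so treat this as an illustration rather than how any particular plugin works.

```python
import math
import random

# Naive (non-band-limited) waveforms: phase in [0, 1) -> sample in [-1, 1].

def sine(phase: float) -> float:
    return math.sin(2.0 * math.pi * phase)

def sawtooth(phase: float) -> float:
    # Ramps from -1 up to +1 over one cycle, then drops back.
    return 2.0 * phase - 1.0

def pulse(phase: float, width: float = 0.5) -> float:
    # High for the first `width` fraction of the cycle, low for the rest.
    # Changing `width` over time gives pulse width modulation.
    return 1.0 if phase < width else -1.0

def square(phase: float) -> float:
    # A square wave is simply a pulse wave with 50% width.
    return pulse(phase, 0.5)

def triangle(phase: float) -> float:
    # Rises from -1 to +1 over the first half cycle, falls back over the second.
    return 4.0 * phase - 1.0 if phase < 0.5 else 3.0 - 4.0 * phase

def noise(phase: float) -> float:
    # No repeating pattern: the phase is ignored and each sample is random.
    return random.uniform(-1.0, 1.0)

def harmonic_magnitudes(shape: str, count: int) -> list[float]:
    """Relative magnitude of harmonics 1..count for the ideal periodic shapes."""
    mags = []
    for n in range(1, count + 1):
        odd = n % 2 == 1
        if shape == "sine":
            mags.append(1.0 if n == 1 else 0.0)        # fundamental only
        elif shape == "sawtooth":
            mags.append(1.0 / n)                       # every harmonic, falling as 1/n
        elif shape == "square":
            mags.append(1.0 / n if odd else 0.0)       # odd harmonics only, 1/n
        elif shape == "triangle":
            mags.append(1.0 / n ** 2 if odd else 0.0)  # odd only, 1/n^2: faster roll-off
        else:
            raise ValueError(f"unknown shape: {shape}")
    return mags

def render(wave, freq_hz: float, sample_rate: int, n_samples: int) -> list[float]:
    """Sample `wave` at `freq_hz` for `n_samples` samples."""
    return [wave((freq_hz * n / sample_rate) % 1.0) for n in range(n_samples)]
```

For example, `harmonic_magnitudes("square", 4)` has zeros in the even positions, and `harmonic_magnitudes("triangle", 3)` gives 1/9 for the third harmonic where the square wave gives 1/3, which is the faster roll-off described for the triangle wave.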

Oscillator - Lennar Digital Sylenth 1

The Filter (Voltage Controlled Filter - VCF)

After leaving the Oscillator, the sound enters the Filter, the module that blocks some frequencies while letting others through. The most common type is the Low Pass Filter, which strongly reduces the high end.

Other common filter types include High Pass, Band Pass and Notch. Whichever type is used, it can be modulated by adjusting the filter Cutoff (the point on the frequency spectrum at which the filter begins to take effect). Sweeping the Cutoff of a Low Pass Filter across the spectrum from high to low, for example, creates a sound that starts bright and ends dull. These adjustments can be made to perfect a particular patch, or modulated over time to create a dramatic effect.
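A cutoff sweep like the one described above can be sketched in Python. This is a simplification under stated assumptions: it uses a basic one-pole low-pass filter (a gentle 6 dB per octave slope), whereas synth filters are usually steeper and add resonance, and the function names are hypothetical.

```python
import math

def lowpass_coefficient(cutoff_hz: float, sample_rate: int) -> float:
    """Smoothing factor for a one-pole low-pass filter at the given cutoff."""
    return 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)

def swept_lowpass(signal: list[float], start_hz: float, end_hz: float,
                  sample_rate: int) -> list[float]:
    """Low-pass `signal` while the cutoff glides from start_hz to end_hz.

    The glide is exponential, so it moves evenly in musical (octave) terms.
    """
    out = []
    y = 0.0  # filter state: the previous output sample
    last = max(len(signal) - 1, 1)
    for i, x in enumerate(signal):
        cutoff = start_hz * (end_hz / start_hz) ** (i / last)
        a = lowpass_coefficient(cutoff, sample_rate)
        y += a * (x - y)  # move part of the way toward the input
        out.append(y)
    return out
```

Running a bright source such as a sawtooth through `swept_lowpass(samples, 8000.0, 200.0, 44100)` lets the high harmonics through at first and smooths them away as the cutoff falls, giving the "bright to dull" movement. The exponential glide is a design choice: a linear glide would spend most of its time in the high octaves.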

Filter - Lennar Digital Sylenth 1

The Amplifier (Voltage Controlled Amplifier - VCA)

After the filter has shaped the signal, it passes through to the Amplifier, which is usually a unity-gain amplifier that varies the amplitude of the signal in response to an applied control voltage. The response curve may be linear or exponential.

The Amplifier determines the instantaneous volume level of a played note, and it silences the output at the end of the note. A VCA may be described as "two-quadrant" or "four-quadrant" in operation. In a two-quadrant VCA, if the control voltage drops to zero or below, the VCA produces no output. In a four-quadrant VCA, once the control voltage drops below zero, the output gain rises according to the absolute value of the control voltage, but the output is inverted in phase relative to the input. Four-quadrant VCAs are used to produce amplitude modulation and ring modulation effects.
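The two-quadrant versus four-quadrant distinction, and the linear versus exponential response curves, can be modelled per sample in Python. This is a minimal sketch under stated assumptions: control voltages are treated as plain numbers (1.0 meaning unity gain), the function names are hypothetical, and the 60 dB range of the exponential curve is an arbitrary choice, not a value from any specific synth.

```python
# Linear response: the gain is simply proportional to the control voltage (CV).

def vca_two_quadrant(sample: float, cv: float) -> float:
    """Gain follows the CV; a CV at or below zero gives silence."""
    return sample * cv if cv > 0.0 else 0.0

def vca_four_quadrant(sample: float, cv: float) -> float:
    """Gain follows |cv|; a negative CV also inverts the phase of the output."""
    return sample * cv

def exponential_gain(cv: float, range_db: float = 60.0) -> float:
    """Exponential response: map a CV in [0, 1] onto a decibel scale.

    cv = 1.0 gives unity gain (0 dB), cv = 0.5 gives -range_db / 2 dB, and a
    CV at or below zero is treated as silence. The 60 dB default is assumed.
    """
    if cv <= 0.0:
        return 0.0
    return 10.0 ** ((cv - 1.0) * range_db / 20.0)

def ring_modulate(carrier: list[float], modulator: list[float]) -> list[float]:
    """Ring modulation: a bipolar modulator drives a four-quadrant VCA."""
    return [vca_four_quadrant(c, m) for c, m in zip(carrier, modulator)]
```

Feeding a bipolar modulator (one that swings below zero) into `vca_two_quadrant` would simply chop off the negative half, which is why ring modulation needs the four-quadrant behaviour.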

The Envelope

The Envelope is attached to the Amplifier and controls exactly how its level moves over time through four parameters known as ADSR, which is why the two modules are described together here. Although Envelopes can control other parameters, the last one in the synthesiser will usually be the Amplitude Envelope. The ADSR controls are:

- Attack time - the time taken for initial run-up from nil to peak level, beginning when the key is first pressed

- Decay time - the time taken for the subsequent run down from the attack level to the designated sustain level

- Sustain level - the level during the main sequence of the sound’s duration, until the key is released

- Release time - the time taken for the level to decay from the sustain level to zero after the key is released

Envelopes are also very useful for reshaping an existing patch until it sounds just right.
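The four ADSR stages can be sketched as a Python function that returns the envelope level at any moment of a note. The straight-line segments and the parameter names are assumptions for illustration (many synths use curved segments), and here the release starts from whatever level the envelope had reached when the key was let go, which also covers notes released before the sustain stage.

```python
def adsr_level(t: float, attack: float, decay: float, sustain: float,
               release: float, note_off: float) -> float:
    """Envelope level (0..1) at t seconds after the key is pressed.

    attack, decay and release are times in seconds, sustain is a level in
    [0, 1], and note_off is when the key is released.
    """
    def held(time: float) -> float:
        # Level while the key is held: attack, then decay, then sustain.
        if time < attack:
            return time / attack  # nil up to peak
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay  # peak down to sustain
        return sustain

    if t < 0.0:
        return 0.0
    if t < note_off:
        return held(t)
    # Release: fall linearly from the level at key release down to zero.
    if release <= 0.0:
        return 0.0
    start = held(max(note_off, 0.0))
    return max(0.0, start * (1.0 - (t - note_off) / release))
```

To apply it as an Amplitude Envelope, multiply each oscillator sample at time `n / sample_rate` by `adsr_level(n / sample_rate, ...)`: a short attack gives a percussive start, while a long release lets the note fade out after the key is lifted.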

Amplifier - Envelope - Lennar Digital Sylenth 1

Low Frequency Oscillator (LFO)

The last of the five main modules is designed to control other parameters within the synthesiser. Unlike the envelope, which starts and finishes, the LFO is cyclical (like a rhythmic pulse). Because it repeats over time, it can control the Voltage Controlled Oscillator (VCO), creating changes in pitch to achieve Vibrato. It can also control the Amplitude, creating Tremolo, and the Cutoff frequency of the Filter, creating a ripple effect.

We cannot hear an LFO directly: “Low” indicates that it oscillates below the range of human hearing (below about 20 Hz), so it always requires a Destination, the parameter it modulates. Once this Source to Destination routing has been set up within the synth, the amount of modulation can be applied, and it can take various shapes or “waves”: because the LFO is an oscillator, it too can be set to different waveforms. When controlling the VCO, for example, it creates different-sounding vibratos depending on the wave shape, and increasing the LFO amount increases the size of the pitch variations in the VCO.
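The LFO routings described above (pitch for vibrato, amplitude for tremolo) can be sketched in Python with a sine-shaped LFO. The function names, the 5 Hz default rate and the depth units (semitones for pitch, a 0 to 1 fraction for amplitude) are illustrative assumptions; swapping `lfo_sine` for another waveform would change the character of the vibrato.

```python
import math

def lfo_sine(t: float, rate_hz: float) -> float:
    """Bipolar sine LFO: output in [-1, 1] at time t seconds."""
    return math.sin(2.0 * math.pi * rate_hz * t)

def vibrato_frequency(base_hz: float, t: float, rate_hz: float,
                      depth_semitones: float) -> float:
    """Pitch destination: bend the VCO up and down by up to depth_semitones."""
    return base_hz * 2.0 ** (depth_semitones * lfo_sine(t, rate_hz) / 12.0)

def tremolo_gain(t: float, rate_hz: float, depth: float) -> float:
    """Amplitude destination: gain swings between 1 - depth and 1."""
    return 1.0 - depth * (0.5 + 0.5 * lfo_sine(t, rate_hz))

def render_vibrato_tremolo(base_hz: float, sample_rate: int, n_samples: int,
                           rate_hz: float = 5.0, pitch_depth: float = 0.5,
                           amp_depth: float = 0.3) -> list[float]:
    """A sine-wave VCO with the same LFO on its pitch and on its amplitude."""
    out = []
    phase = 0.0  # accumulated oscillator phase, in cycles
    for n in range(n_samples):
        t = n / sample_rate
        out.append(math.sin(2.0 * math.pi * phase) * tremolo_gain(t, rate_hz, amp_depth))
        # Advance by the current (modulated) frequency so the pitch bends smoothly.
        freq = vibrato_frequency(base_hz, t, rate_hz, pitch_depth)
        phase = (phase + freq / sample_rate) % 1.0
    return out
```

Raising `pitch_depth` widens the vibrato, matching the point that a larger LFO amount produces larger frequency variations. The oscillator phase is accumulated sample by sample rather than computed as `sin(2π·f(t)·t)`, because the latter would distort the pitch when `f(t)` changes. The same `lfo_sine` output could equally be scaled onto a filter's cutoff frequency to get the ripple effect.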

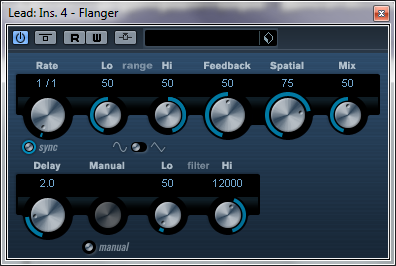

LFO - Lennar Digital Sylenth 1

Reflections

It's a little difficult to describe the tones and textures of a sound or note produced by a synth, but once we understand how these modules work and interact, we can use synthesis as a kind of language to accurately express and emulate almost any type of sound. I hope you find this simplified explanation of the synthesis modules as useful as I did. Thanks very much again for reading, and don't forget to comment.